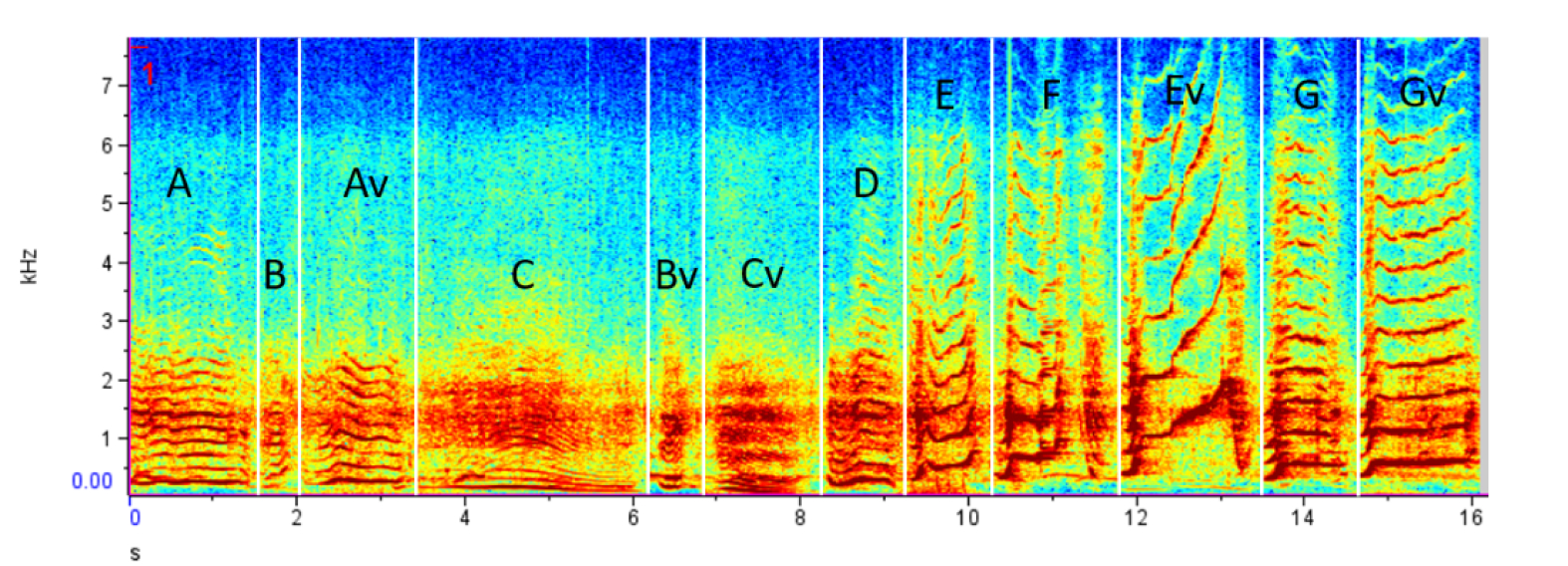

We find marine species vocalizations using state-of-the-art acoustic AI technology.

Searching for vocalizations in a sea of background noise is tedious and time-consuming. Our models can save you that time and effort.

We have a framework for training deep-learning models for acoustic detection and classification. Our experienced research and development team keeps improving our capabilities to ensure we’re always aligned with the state of the art and with best practices in acoustic classification.

For more information about our framework for acoustic research, see our repository.

Our people are as important to us as the whales!

At Deep Voice, we value human knowledge and expertise and aim to get the most out of human-machine collaboration. We provide active learning mechanisms that make human annotation of training data more efficient, so that fewer annotations are needed and each one contributes as much as possible to the result. You can also work with our annotation team on annotation and data preparation for the models.
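To give a feel for what active learning means in practice, here is a minimal sketch of one common strategy, uncertainty sampling: the model scores unlabeled clips, and the clips it is least sure about are sent to human annotators first. This is an illustration only and not necessarily how our framework does it; the function names, clip ids, and probabilities below are all hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a class-probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(predictions, budget):
    """Return the ids of the `budget` clips the model is least certain about.

    predictions: dict mapping a clip id to its list of class probabilities.
    Higher entropy means the model is less certain about that clip.
    """
    ranked = sorted(
        predictions,
        key=lambda clip_id: entropy(predictions[clip_id]),
        reverse=True,
    )
    return ranked[:budget]


# Hypothetical model outputs for the classes [whale call, background noise].
predictions = {
    "clip_a": [0.98, 0.02],  # confident: whale call
    "clip_b": [0.55, 0.45],  # uncertain
    "clip_c": [0.10, 0.90],  # fairly confident: background noise
    "clip_d": [0.50, 0.50],  # maximally uncertain
}

to_annotate = select_for_annotation(predictions, budget=2)
print(to_annotate)  # ['clip_d', 'clip_b']
```

With a budget of two annotations, the confident clips are skipped and human effort goes to the two ambiguous ones, which is where a label is most likely to change what the model learns.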

Our expert team can also help you annotate data manually, whether or not you use our AI services.